Interesting Articles I've Read in 2023

Below is a collection of interesting articles I’ve read in 2023. Several papers focus on foundations or methodology ranging from mathematical logic and history to causal inference. Two papers look like jokes or hoaxes but are insightful in the end. We consider the history of women in analytic philosophy and the first law and economics program from the early 20th century. There is a primer on synthetic data for policymakers and a short look at working with the pseudoinverse for highly structured matrices. Finally, we survey a scuffle between internet subcultures and consider income inequality globally.

If you have some thoughts on my list or would like to share yours, send me an email at brettcmullins(at)gmail.com. Enjoy the list!

What do we want a foundation to do? Comparing set-theoretic, category-theoretic, and univalent approaches

Author: Penelope Maddy

In the collection Reflections on the Foundations of Mathematics: Univalent Foundations, Set Theory and General Thoughts

Published: 2019

Link: Springer

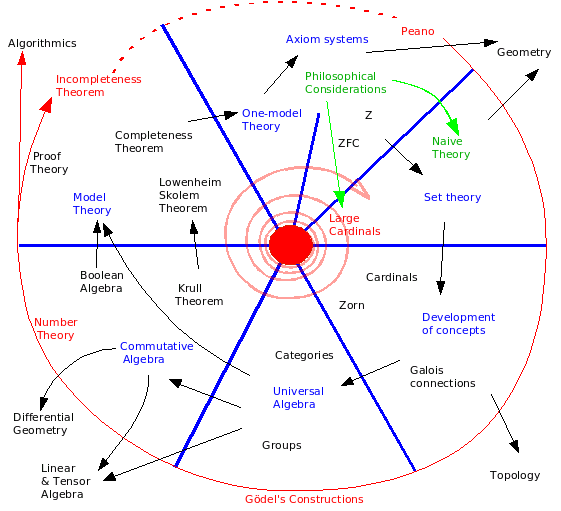

Set theory is the usual foundation for mathematics. It fills this role since most of mathematics is formalizable in the language of sets and it provides enough structure to reason about metamathematical propositions. In the latter half of the 20th century, category theory emerged as a possible competitor and, in the 2010s, homotopy type theory entered the fray. This article discusses the notion of a foundation for mathematics and what sort of things we expect a foundation to do. Maddy argues that the three candidate notions satisfy different foundational virtues and should be understood as differing perspectives rather than as direct rivals.

Set theory satisfies several foundational virtues. Set theory is a generous arena by being flexible enough to express most of mathematics. Similarly, it acts as a shared standard, since we can import results from one area of mathematics to another without too much worry. Finally, it acts as a metamathematical corral by being expressive enough to allow for metamathematical analysis. For instance, using large cardinal axioms of increasing strength, we can measure the relative consistency of a theory to assess risk of inconsistency.

Category theory developed in the 1940s from work in algebraic geometry and algebraic topology, and, in the 1960s, some viewed category theory as a plausible alternative foundation to set theory. Maddy dismisses the notion that category theory can fill the same roles as set theory; however, category theory does have the benefit of providing essential guidance for practitioners. Whereas set theory can be overly expressive with models containing useless constructions, category theory provides a framework to better guide the practitioner in algebraic fields. Interestingly, the virtue of essential guidance seems to conflict with generous arena.

Homotopy type theory or univalent foundations emerged from homotopy theory and type theory in the 2010s. Maddy argues that it’s not clear which of the roles homotopy type theory satisfies other than a new role: proof checking. Homotopy type theory is set up to be integrated with formal verification software such as Coq. Providing essential guidance and proof checking are helpful properties that will benefit mathematicians alongside the roles filled by set theory. Maddy closes with

“there’s no conflict between set theory continuing to do its traditional foundational jobs while these newer theories explore the possibility of doing others.”

Clues, Margins, and Monads: The Micro-Macro Link in Historical Research

Author: Matti Peltonen

Publication: History and Theory

Published: 2001

Link: JSTOR

Microhistory emerged in the 1970s with case studies such as Carlo Ginzburg’s The Cheese and the Worms: The Cosmos of a Sixteenth-Century Miller (1976). The idea of this approach was not just to zoom in on an unusual and interesting region, quarrel, or figure, but to use this instance to infer unacknowledged or unrecorded events or trends. The Cheese and the Worms follows the exploits of a heretical sixteenth-century Italian miller called Menocchio who argued for his unorthodox views in the ecclesiastical courts only to face the wrath of the Counter-Reformation. Ginzburg takes Menocchio’s beliefs as evidence of a deeply held peasant religion that had not been well recorded.

This approach to history seeks to identify “clues” which are “taken as a sign of a larger, but hidden or unknown, structure. A strange detail is made to represent a wider totality.” In this way, observation of the micro leads to inferences about the macro. Peltonen surveys approaches similar to Ginzburg’s, including Giovanni Levi’s approach to clues, Walter Benjamin’s monads, and Michel de Certeau’s margins, among others.

Peltonen contrasts microhistory with the adoption of microfoundations in social science models in the 1970s, best exemplified by the Lucas critique. Echoing a common critique of microfoundations, Peltonen notes that such models reduce all macroeconomic behavior to the interaction of microeconomic agents without inferring back to the macro state.

Particular formulae for the Moore–Penrose inverse of a columnwise partitioned matrix

Authors: Jerzy K. Baksalary and Oskar Maria Baksalary

Publication: Linear Algebra and Its Applications

Published: 2007

Link: Click here

This paper proves results for computing the Moore-Penrose pseudoinverse of horizontal block matrices, i.e., matrices of the form $A = [A_1 \ A_2]$. In the general case, computing the pseudoinverse of $A$, denoted $A^+$, doesn’t take advantage of $A$’s block structure. The authors prove results that exploit this structure.

The most useful result shows that the pseudoinverse distributes over the blocks when the blocks are orthogonal, i.e., when every column of $A_1$ is orthogonal to every column of $A_2$. In that case, $A^+$ is obtained by stacking the blockwise pseudoinverses: $A^+ = \begin{bmatrix} A_1^+ \\ A_2^+ \end{bmatrix}$. The intuition is that mutually orthogonal matrices express different information. This result is similar to reasoning about the probability of independent events. To calculate the probability of two coin flips both landing heads, you only need to know the probability that each lands heads. Similarly, to compute the pseudoinverse of a horizontal block matrix of mutually orthogonal blocks, you only need to know the pseudoinverses of the individual blocks.
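As a quick numerical sanity check of this result, here is a minimal NumPy sketch. The matrices are made up for illustration (they are not from the paper): two blocks with orthogonal column spaces are built from disjoint columns of a random orthonormal basis, and the directly computed pseudoinverse is compared with the stacked blockwise pseudoinverses.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical example: take disjoint columns of an orthonormal basis Q so
# that the two blocks have mutually orthogonal column spaces.
Q, _ = np.linalg.qr(rng.normal(size=(6, 6)))
A1 = Q[:, :2] @ rng.normal(size=(2, 2))   # 6x2 block spanning Q[:, :2]
A2 = Q[:, 2:5] @ rng.normal(size=(3, 3))  # 6x3 block spanning Q[:, 2:5]

A = np.hstack([A1, A2])  # A = [A1 A2]

# Pseudoinverse computed on the whole matrix vs. block by block.
direct = np.linalg.pinv(A)
blockwise = np.vstack([np.linalg.pinv(A1), np.linalg.pinv(A2)])

orthogonal = np.allclose(A1.T @ A2, 0)   # the blocks really are orthogonal
agree = np.allclose(direct, blockwise)   # the two computations match
print(orthogonal, agree)
```

If the blocks are not orthogonal (for example, if `A2` reuses a column of `Q` that `A1` also uses), the two computations will generally disagree, which is why the paper's more general formulas are needed.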

Every Author as First Author

Authors: Erik D. Demaine and Martin L. Demaine

Publication: Proceedings of SIGTBD

Published: 2023

Link: arXiv

I’ve always held that the best spoofs are also effective entries in their respective genre. For instance, Scream is not just Wes Craven poking fun at tropes of the slasher film; it is itself an effective slasher!

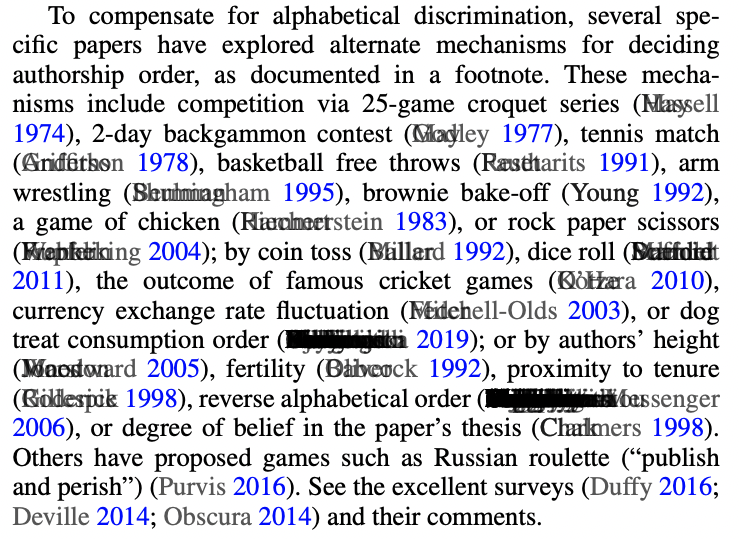

This paper proposes a scheme for fairly listing author names when citing papers by overlaying all of the names (pictured above), simultaneously addressing several issues that crop up in research. At first glance, this is just an amusing joke in a joke paper at a joke conference. On second thought, there’s some depth here. This is a well-organized paper. The authors implemented their proposal in $\LaTeX$ and sketched out a solution for HTML. In the conclusion, they even anticipate a challenge I had to the fairness of their solution. Bravo!

The First Great Law & Economics Movement

Author: Herbert Hovenkamp

Publication: Stanford Law Review

Published: 1990

Link: JSTOR

Hearing of the law and economics program, one immediately thinks of the University of Chicago with Friedrich von Hayek, Milton Friedman, and others as discussed, for instance, in Rationalizing Capitalist Democracy from my book list for 2021. This paper surveys a prior law and economics movement which stretched from the anti-trust era and the marginal revolution in the 1880s to the rise of ordinal utility theory in the 1920s and 1930s.

Much of this first movement sought to use material or other objective measures of welfare (even if imperfect) to reason about policy. There are certainly parallels between this and what John Rawls was up to in A Theory of Justice. Over time, this strategy increasingly informed public policy and judicial reasoning; however, it fell out of favor in the academic literature with the rise of the ordinalist movement, which argued against interpersonal comparisons of utility.

Analytic women: The lost women of early Analytic philosophy

Authors: Jeanne Peijnenburg and Sander Verhaegh

Publication: Aeon

Published: 2023

Link: Click here

The vast majority of scholars are eventually forgotten by history. Two pathways to being forgotten are the historical and the historiographical. The former is when one’s work is not appreciated by one’s contemporaries; the latter is when one’s work is excluded from the canon. Peijnenburg and Verhaegh survey interesting examples of each pathway from Analytic philosophy in the late nineteenth and early twentieth centuries.

An example of the historiographical pathway is Susanne Langer, who studied under Alfred North Whitehead at Harvard and became a proponent of logical atomism in the US. Her work was widely regarded and discussed, for instance, by the Vienna Circle in the early 1930s. Her 1942 book, Philosophy in a New Key, sought to apply logical methods to the arts. As she drifted closer to the humanities, she fell out of the account of analytic philosophy in the US and elsewhere. Langer, however, remained relevant in the philosophy of art.

An example of the historical pathway is E. E. Constance Jones, a professor at Cambridge alongside Bertrand Russell and G. E. Moore. Jones offered a solution to a version of Frege’s Puzzle in 1890, two years before Frege’s On Sense and Reference. Her work was largely ignored by her contemporaries, who considered it too Victorian since it worked within the Aristotelian logic tradition.

Synthetic Data and Public Policy: supporting real-world policymakers with algorithmically generated data

Author: Kevin Jenkins

Publication: Policy Quarterly

Published: 2023

Link: Click here

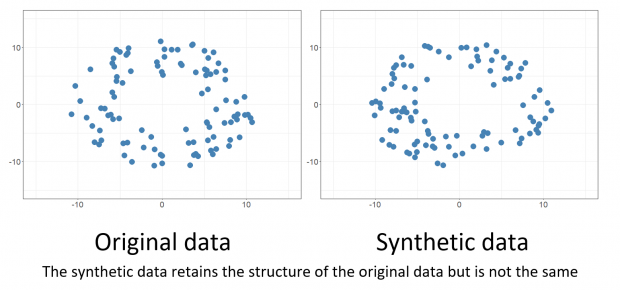

This article is an informal survey of synthetic data with a dash of privacy aimed at policymakers. The opening does an excellent job explaining synthetic data at a lay level, in particular that “synthetic data is generated to preserve the statistical relationships and patterns of the original real-world dataset” rather than just mimic its database structure. For generating synthetic data, Jenkins mentions GANs and VAEs. Regarding statistical relationships, a synthetic dataset is considered “lo-fi” if only one-way marginals are preserved and “hi-fi” if higher-order marginals are preserved. Lo-fi synthetic data preserves the distribution of each attribute but no correlations between attributes.
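To make the lo-fi notion concrete, here is a minimal sketch on a made-up two-column dataset. It is not how GANs, VAEs, or any method from the article work, and it offers no privacy protection; the function name `lofi_synthetic` is hypothetical. Each column is simply resampled independently from its own empirical distribution, so the one-way marginals survive while the correlation between attributes is lost.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "real" dataset: two strongly correlated binary attributes.
n = 20_000
x = rng.integers(0, 2, size=n)
flip = rng.random(n) < 0.1
y = np.where(flip, 1 - x, x)  # y agrees with x about 90% of the time
real = np.column_stack([x, y])

def lofi_synthetic(data, n_rows, rng):
    """Sample each column independently from its empirical one-way marginal."""
    cols = [rng.choice(data[:, j], size=n_rows, replace=True)
            for j in range(data.shape[1])]
    return np.column_stack(cols)

synth = lofi_synthetic(real, n, rng)

# One-way marginals (here, column means) are approximately preserved ...
real_means, synth_means = real.mean(axis=0), synth.mean(axis=0)
# ... but the correlation between the two attributes is not.
real_corr = np.corrcoef(real, rowvar=False)[0, 1]
synth_corr = np.corrcoef(synth, rowvar=False)[0, 1]
print(real_means, synth_means, real_corr, synth_corr)
```

A hi-fi generator would additionally need to match the joint (two-way) distribution of the attributes, which is exactly what independent column sampling throws away.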

A good bit of space is devoted to privacy matters and, I’m happy to see, differential privacy is discussed. The challenge for privacy researchers is to find “the balance between fidelity and mitigating the risk of re-identification” in synthetic data generation. The general strategy for doing this with differential privacy is to privately choose a set of strongly correlated higher-order marginals to preserve and make assumptions on the distribution of unmeasured attributes. This paper of mine introduces an algorithm called AIM for privately generating synthetic data for high-dimensional discrete datasets.

Identification and Estimation of Local Average Treatment Effects

Authors: Guido Imbens and Joshua Angrist

Publication: American Economic Review

Published: 1994

Link: JSTOR

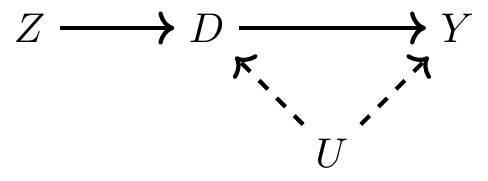

Causal inference is a powerful method in the arsenal of modern empirical social science researchers. This includes more than randomized experiments, since social scientists often want to learn causal effects from observational data. With experimental data under ideal conditions, causal effects are simple to estimate and interpret. For example, for an ideal simple experiment measuring the effect of binary treatment $D$ on outcome $Y$, the average treatment effect (ATE) is estimated as the average outcome for units receiving the treatment minus the average outcome for units without treatment. We can interpret this effect counterfactually as the average change in $Y$ if treatment is received compared to no treatment over the whole study population.
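In symbols, the difference-in-means estimate described above can be written as follows. The notation is an assumption introduced here for illustration: $Y_i$ and $D_i$ are the outcome and treatment indicator for unit $i$, and $n_1$ and $n_0$ are the numbers of treated and untreated units.

$$\widehat{\mathrm{ATE}} = \frac{1}{n_1} \sum_{i : D_i = 1} Y_i - \frac{1}{n_0} \sum_{i : D_i = 0} Y_i$$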

For complex causal models such as those using observational data, we may not be able to obtain causal effect estimates that apply as generally. This short but insightful paper proves identification results within the potential outcomes framework for the instrumental variables (IV) causal model where the estimated effect can be interpreted as the local average treatment effect (LATE). To illustrate this, suppose the instrument $Z$ indicates whether one is assigned the treatment and $D$ indicates whether one actually receives the treatment. The LATE estimate is the ATE for the subpopulation of compliers, i.e., those who receive the treatment if assigned but not otherwise.
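With a binary instrument, the IV estimate reduces to a simple ratio: the effect of $Z$ on $Y$ divided by the effect of $Z$ on $D$ (often called the Wald estimator). The simulation below is a minimal sketch of that idea on an entirely made-up population; the shares of compliers, never-takers, and always-takers and the effect sizes are assumptions chosen for illustration, not values from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000

# Hypothetical population: 50% compliers, 30% never-takers, 20% always-takers.
kind = rng.choice(["complier", "never", "always"], size=n, p=[0.5, 0.3, 0.2])
z = rng.integers(0, 2, size=n)  # randomly assigned instrument

# Compliers take the treatment only if assigned; always-takers always do;
# never-takers never do (so there are no defiers).
d = np.where(kind == "complier", z, (kind == "always").astype(int))

# Treatment effects and baseline outcomes both differ by type, so a direct
# comparison of treated and untreated units is confounded.
effect = np.select([kind == "complier", kind == "always"], [2.0, 5.0], default=0.0)
baseline = np.select([kind == "always", kind == "never"], [3.0, -1.0], default=0.0)
y = baseline + effect * d + rng.normal(size=n)

# Wald estimator: effect of Z on Y divided by effect of Z on D.
itt_y = y[z == 1].mean() - y[z == 0].mean()
itt_d = d[z == 1].mean() - d[z == 0].mean()
late_hat = itt_y / itt_d

# Naive comparison of treated and untreated units, for contrast.
naive = y[d == 1].mean() - y[d == 0].mean()
print(late_hat, naive)
```

In this setup the ratio should land near the compliers' effect of 2.0, while the naive comparison is pulled far away from it by the always-takers and never-takers. The estimate says nothing about the effect for those two groups, which is the sense in which it is local.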

For a well-known and early example of this approach, see Angrist’s Lifetime Earnings and the Vietnam Era Draft Lottery (1990). This paper estimates the effect of veteran status during the Vietnam War on earnings, using draft lottery numbers, which were assigned by birthday, as an instrument for military service. This paper was part of the citations for Angrist and Imbens’ share of the Nobel Prize in Economics in 2021.

Rational Magic

Author: Tara Isabella Burton

Publication: The New Atlantis

Published: 2023

Link: Click here

The rationalist community is a collection of blogs and websites that formed in the late 2000s to discuss cognitive biases, utilitarian decision-making, AI, etc. This community is largely siloed but has gotten press over the years by overlapping with better-known ideas/causes such as effective altruism, longtermism, and AI safety.

In recent years, some in this community have grown disillusioned with what they perceive as the parochial thought and methods of the rationalists, such as focusing exclusively on narrow quantifiable metrics to measure the efficiency of charitable donations. The disaffected have embraced different sorts of experiences and ways of interacting with and finding meaning in the world, such as through traditional religion or even the occult. Rational Magic surveys the rise of the postrationalists and provides a helpful narrative connecting these internet subcultures to broader ideas and figures.

Burton offers the following contrast:

The rationalists dreamed of overcoming bias and annihilating death; the postrats are more likely to dream of integrating our shadow-selves or experiencing oneness.

While Burton’s narrative is often sympathetic to both groups, I can’t help but read this contrast as a caricature of the divide between logical empiricism and existentialism in the mid-20th century or between analytic and continental philosophy more generally. Much like cryptocurrency and blockchain enthusiasts and other insular online communities, the level of discourse with both rationalists and postrationalists is often amateurish and peppered with in-group references. It is interesting, however, when their ideas spill out into the mainstream. Unlike Burton (and perhaps David Brooks), though, I find the ideas and approaches of both groups suspect at best.

Can a good philosophical contribution be made just by asking a question?

Authors: Joshua Habgood-Coote, Lani Watson, and Dennis Whitcomb

Publication: Metaphilosophy

Published: 2022

Link: Paper - Commentary

This paper asks a single question in its title and leaves the response to the reader. Is it a hoax? Likely not, since it makes an interesting point that’s certainly relevant to metaphilosophy. Is it the shortest legitimate paper ever published? Technically yes, but not quite; the journal editor published a letter from the authors as commentary on the idea of the paper. The commentary has enough whimsy to appear sincere and enough heft to be taken seriously.

The authors compare their paper to embodied art, such as Duchamp’s sculpture Fountain, insofar as the question in the title contains or expresses the content of the paper. A more interesting comparison is with speech acts, i.e., utterances that are made true or come to pass by the act of uttering them. For instance, “I have typed this” and “you are reading this” are both performative analogues of the speech act. Notice that these cases are self-referential in the same way that asking the paper’s question answers itself.

The Great Convergence: Global Equality and Its Discontents

Author: Branko Milanovic

Publication: Foreign Affairs

Published: 2023

Link: Click here

Rising income and wealth inequality is a commonplace narrative for explaining, among other things, the increase in social strife within liberal democratic countries. Note, though, that this narrative has come under fire a bit in the US due to Gerry Auten and David Splinter’s recent paper.

In this article, Milanovic argues that the income inequality narrative is turned on its head when viewed globally. In the 30-year period from 1988 to 2018, Western countries saw the lower end of earners fall in the global distribution of income while the upper end remained relatively unchanged. This is due to rising incomes among middle- and high-earners in Asia, particularly in China and India.

This finding suggests a secondary source for economic discontent:

Western countries are increasingly composed of people who belong to very different parts of the global income distribution.

Uncertainty in the international relations landscape from Ukraine to Israel and elsewhere makes it difficult to predict how global incomes will shake out in the coming years. Nevertheless, this is an interesting observation and a stylized fact worth explaining.
