Interesting Articles I've Read in 2024

Below is a collection of interesting articles I’ve read in 2024. Three papers are on differential privacy and adjacent topics: a recent method for differentially private SGD that draws on private query answering, an intuitive watermarking scheme for language models, and a paper from 1986 that proposed $k$-anonymity before it was formalized as a criterion for de-identification. Several papers are on the history of ideas, ranging from early twentieth-century pragmatism, the synergies and antagonisms between poetry and philosophy, and the relation between periodicals and intellectual progress to the deaths of Analytical Marxism and Effective Altruism.

Two papers concern mathematical logic. One from 1924 reduces quantified formulas to functions and is foundational for functional programming. Another explores the continuum hypothesis as an axiom of set theory. Finally, there’s a look at the early history of cocaine and culture, a nineteenth-century legal battle between evolutionary theory and spiritualism, and reflections on one generation’s dominance of US politics. If you have some thoughts on my list or would like to share yours, send me an email at brettcmullins(at)gmail.com. Enjoy the list!

Finding a needle in a haystack or identifying anonymous census records

Author: Tore Dalenius

Publication: Journal of Official Statistics

Published: 1986

Link: Click Here

Lately, I’ve been reading papers from the statistical disclosure literature that predate differential privacy, i.e., before 2006. This short paper introduces what would later be called $k$-anonymity, a privacy criterion that was, and still is, widely applied, especially to government datasets. $k$-anonymity would be formalized in a series of papers by Latanya Sweeney and others in the late 1990s and early 2000s.

For a dataset containing sensitive information about individuals such as Census data, a notion of privacy called de-identification seeks to make it impossible to link a record in the dataset with an individual. Removing personally-identifiable information (PII) from the data may not be sufficient to prevent re-identification, since some records may still be unique or only appear a few times.

For example, if Alice knows Bob’s age and zip code, she may be able to identify him in a dataset even if his name has been removed. If Bob’s combination of age and zip code is unique, say he is the only 45-year-old living in his downtown Chicago zip code, then Alice could identify him perfectly. If, however, only a few people share Bob’s age and zip code, Alice could still identify Bob with high probability. In both cases, age and zip code act as quasi-identifiers, a term Dalenius coins in this paper.

Dalenius sketches a few ways to check if $k$-anonymity is satisfied and considers what to do if it’s not. A dataset is said to be $k$-anonymous for a set of attributes if, for every record in the dataset, there are at least $k-1$ other records that share the same values on the given attributes. In the example above, if the dataset is 2-anonymous, then Bob would not be perfectly identifiable because there would be at least one other record that shares his age and zip code.
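
The definition translates directly into a frequency count over the quasi-identifier columns. Below is a minimal Python sketch, with made-up records and hypothetical function names, that checks $k$-anonymity and lists the records violating it.

```python
from collections import Counter

def qi_key(record, quasi_identifiers):
    """Tuple of quasi-identifier values used to group records."""
    return tuple(record[a] for a in quasi_identifiers)

def violating_records(records, quasi_identifiers, k):
    """Records whose quasi-identifier combination is shared by fewer than k records."""
    counts = Counter(qi_key(r, quasi_identifiers) for r in records)
    return [r for r in records if counts[qi_key(r, quasi_identifiers)] < k]

def is_k_anonymous(records, quasi_identifiers, k):
    """True if every record shares its quasi-identifier values with at least k - 1 others."""
    return not violating_records(records, quasi_identifiers, k)

# Made-up records with names already removed; the zip codes are illustrative.
records = [
    {"age": 45, "zip": "60601", "diagnosis": "asthma"},  # "Bob": the only 45-year-old in 60601
    {"age": 32, "zip": "60601", "diagnosis": "flu"},
    {"age": 32, "zip": "60601", "diagnosis": "none"},
    {"age": 45, "zip": "60614", "diagnosis": "flu"},
    {"age": 45, "zip": "60614", "diagnosis": "none"},
]
print(is_k_anonymous(records, ["age", "zip"], k=2))  # False
print(is_k_anonymous(records, ["age"], k=2))         # True
```

In this toy example, dropping `zip` from the released attributes restores 2-anonymity, at the cost of information.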

If the criterion is not satisfied and privacy is potentially compromised, what should we do with the data? We could throw the offending records away, but that could have unpredictable downstream effects. We could suppress quasi-identifier attributes in the records. Not only does this inherit the former issue, but imputation could be used to recover the suppressed data. We could “perturb the data”, by which Dalenius means something like generating synthetic data. Finally, we could use an encrypted computation scheme such as homomorphic encryption to compute statistics without directly exposing the data. Unsurprisingly, these latter two options run into privacy issues of their own.

One generation has dominated American politics for over 30 years

Publication: The Economist

Published: 2024

Link: The Economist

This article is an interesting time capsule. When it was published on July 4th of this year, Biden had yet to withdraw from the presidential race, and the attempted assassination of Trump was about a week away. At the time, Biden and Trump were historically unpopular candidates.

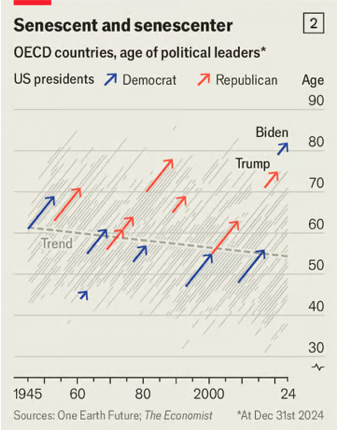

The pair also share their decade of birth, the 1940s, and that generation has dominated US politics over the past thirty years. With the exception of Obama, every president from Clinton onward, as well as many challengers on the opposing ticket, was born in the 1940s. The article points to this generation’s hold on power as a source of political dysfunction, as it rehashes the rhetoric and disputes of the tumultuous late 1960s.

As this generation aged, so did the average age of politicians in the US. While the average age of US presidents has risen from election to election over the past fifty years, the trend for OECD political leaders is slightly downward. Moreover, among these countries, the US has the oldest legislature on average in both its upper and lower houses. The Economist explores this in depth in its recent podcast Boom!; however, I haven’t had a chance to listen yet.

Taking Pragmatism Seriously Enough: Toward a Deeper Understanding of the British Debate over Pragmatism, ca. 1900-1910

Author: Ymko Braaksma

Publication: Journal of the History of Ideas

Published: 2024

Link: Click Here

In the early twentieth century, a leading Idealist philosopher in Britain, F. H. Bradley, posited that he may be a pragmatist after all. Roughly speaking, idealism is a speculative approach to philosophy that studies reality as a whole and often in a top-down fashion. Bradley’s idealism sets such a high bar for knowledge and truth that he thinks it practically unattainable. Rather than give in to skepticism, Bradley adopts a pragmatic view as the best one can do.

Braaksma argues that F. C. S. Schiller’s response is instructive for understanding pragmatic thought. Schiller denies that Bradley is a pragmatist because Bradley’s account of truth and knowledge still aims to attain absolute certainty. The pragmatism of Schiller, John Dewey, and William James conceives the aim of thought through an evolutionary and psychological frame “whose main function is helping an organism live”. On this account, pragmatism is less about ends and more about the means of one’s theories of truth and knowledge.

Recent work by Cheryl Misak and others has sought to reexamine or perhaps revive pragmatism along the lines of C. S. Peirce’s program. Braaksma contends that Schiller’s program is likewise worthy of reexamination.

Improved Differential Privacy for SGD via Optimal Private Linear Operators on Adaptive Streams

Authors: Sergey Denisov, Brendan McMahan, Keith Rush, Adam Smith, and Abhradeep Guha Thakurta

Publication: NeurIPS Proceedings

Published: 2022

Link: arXiv

The predominant method for privately training a machine learning model is differentially private stochastic gradient descent (DP-SGD). At each step, this method clips each per-example gradient to a bounded norm and adds Gaussian noise to their sum, with the noise calibrated to one’s privacy budget (as well as the clipping norm). Each gradient measurement is treated as a separate query.
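
A minimal NumPy sketch of one DP-SGD gradient step, assuming per-example gradients are already available as rows of an array. The function name and its parameters (`clip_norm`, `noise_multiplier`) are illustrative, and converting a noise multiplier into an $(\varepsilon, \delta)$ budget requires a privacy accountant, which is omitted here.

```python
import numpy as np

def dp_sgd_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Hypothetical helper: clip per-example gradients, sum, add Gaussian noise, average."""
    batch_size, dim = per_example_grads.shape
    # Rescale any gradient whose L2 norm exceeds clip_norm, bounding each example's influence.
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    clipped = per_example_grads * np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    # Gaussian noise scaled to clip_norm, the L2 sensitivity of the clipped sum.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=dim)
    return (clipped.sum(axis=0) + noise) / batch_size

# Usage with made-up gradients: one noisy update of a 10-dimensional parameter vector.
rng = np.random.default_rng(0)
params = np.zeros(10)
grads = 5.0 * rng.normal(size=(32, 10))
params -= 0.1 * dp_sgd_gradient(grads, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
```

Note that fresh, independent noise is drawn for every step, which is exactly the per-query view that the paper below revisits.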

This paper introduces a new method for differentially private SGD that treats gradient measurement as a prefix query on the sequence of gradients. The key observation is that, in vanilla SGD, the model parameters at each step are determined by a prefix sum of the gradients so far, so what matters is the noise in those sums rather than in each gradient individually. To add noise optimally, the authors use a variant of the matrix mechanism, a method for optimally answering linear queries under differential privacy. The matrix mechanism factors the desired workload $A$ as $A = BC$: it privately answers the strategy queries $C$, which can have low sensitivity, and then reconstructs answers to the workload by applying $B$, choosing the factorization to minimize the resulting noise.
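
To see why the factorization matters, here is a small NumPy sketch comparing three factorizations of the prefix-sum workload $A$ (the lower-triangular matrix of ones). It assumes each gradient participates once, so the sensitivity of $C$ is its largest column norm, and it measures total expected squared error with a unit noise multiplier as $\|B\|_F^2 \cdot \mathrm{sens}(C)^2$. The square-root factorization is a simple closed-form choice for illustration, not the optimized factorization the paper computes.

```python
import numpy as np

def expected_error(B, C):
    """Total expected squared error of the matrix mechanism for A = B @ C with unit noise:
    ||B||_F^2 * sens(C)^2, where sens(C) is the largest L2 column norm of C
    (assumes each gradient affects exactly one column, i.e. single participation)."""
    sens = np.linalg.norm(C, axis=0).max()
    return np.linalg.norm(B, "fro") ** 2 * sens ** 2

def sqrt_prefix_factor(n):
    """Lower-triangular Toeplitz M with M @ M equal to the prefix-sum matrix.
    Its entries are the power-series coefficients of (1 - x)^(-1/2)."""
    c = np.ones(n)
    for k in range(1, n):
        c[k] = c[k - 1] * (2 * k - 1) / (2 * k)
    M = np.zeros((n, n))
    for i in range(n):
        M[i, : i + 1] = c[: i + 1][::-1]  # M[i, j] = c[i - j] for j <= i
    return M

n = 64
A = np.tril(np.ones((n, n)))  # row t sums gradients 0..t
M = sqrt_prefix_factor(n)
err_per_gradient = expected_error(A, np.eye(n))  # noise each gradient, then sum (DP-SGD style)
err_per_prefix = expected_error(np.eye(n), A)    # noise each prefix sum directly
err_sqrt = expected_error(M, M)                  # split the work between B and C
print(err_per_gradient, err_per_prefix, err_sqrt)
```

The first two choices put all of the structure on one side of the factorization; splitting it between $B$ and $C$ gives noticeably lower error, which is the intuition the paper pushes further by optimizing over factorizations.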

This approach to differentially private SGD is now called DP-MF for matrix factorization. In addition to vanilla SGD, this framework can accommodate SGD with momentum or any extension that uses a linear function of the gradients. Empirically, this approach to noise addition yields significantly higher accuracy on the test set compared to existing methods.
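
The claim that momentum fits the same framework can be checked directly. Assuming heavy-ball momentum with $m_t = \beta m_{t-1} + g_t$ and $\theta_{t+1} = \theta_t - \eta m_t$ (one common convention, chosen here for illustration), the displacements $\theta_0 - \theta_{t+1}$ equal a fixed lower-triangular matrix times the stacked gradients, so that matrix can serve as the workload. The function names below are hypothetical.

```python
import numpy as np

def momentum_workload(n, lr, beta):
    """Matrix A with (A @ G)[t] = theta_0 - theta_{t+1} for heavy-ball momentum SGD."""
    A = np.zeros((n, n))
    for t in range(n):
        for s in range(t + 1):
            # g_s enters momenta m_s, ..., m_t with weights beta^(k - s)
            A[t, s] = lr * sum(beta ** (k - s) for k in range(s, t + 1))
    return A

def momentum_displacements(G, lr, beta):
    """Run momentum SGD from theta_0 = 0 on a fixed gradient sequence G (one row per step)."""
    m = np.zeros(G.shape[1])
    theta = np.zeros(G.shape[1])
    out = []
    for g in G:
        m = beta * m + g
        theta = theta - lr * m
        out.append(-theta)  # theta_0 - theta_{t+1}, since theta_0 = 0
    return np.array(out)

G = np.random.default_rng(1).normal(size=(20, 3))  # made-up gradients
assert np.allclose(momentum_workload(20, 0.1, 0.9) @ G, momentum_displacements(G, 0.1, 0.9))
```

With `beta = 0` the workload reduces to `lr` times the prefix-sum matrix, recovering vanilla SGD.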

This is a really cool paper because it shows how improvements in fundamental tasks such as private query answering (which is what I work on) can be applied to make non-trivial advances in other areas.

Cocaine: a cultural history from medical wonder to illicit drug

Author: Douglas R. J. Small

Publication: Aeon

Published: 2024

Link: Click Here

During the forty-year period from 1880 to 1920, cocaine went from an obscure compound to a wonder drug, and then to a controlled substance blamed for social ills. While cocaine was known as a painkiller and stimulant, Karl Koller, an ophthalmologist and colleague of Sigmund Freud in Vienna, discovered its properties as a local anesthetic in 1884. This finding changed how some surgeries were performed, since patients no longer had to be fully sedated.

Credit: Jack Tracy and Jim Berkey

Cocaine quickly grew in popularity. In Arthur Conan Doyle’s The Sign of the Four (1890), Sherlock Holmes’ intravenous use of cocaine was meant to depict him as a modern man informed by late Victorian science. By 1904, however, Watson recounts in the short story The Missing Three-Quarter that Holmes had to be weaned off cocaine due to dependence. This illustrates how quickly the tide turned against this supposed panacea. Over the next two decades, the sale of cocaine would be restricted to medical professionals in many countries, and alternative local anesthetics would be introduced.

This article is a pitch for the author’s recent book Cocaine, Literature, and Culture, 1876-1930 (2024). In many ways, I feel like the target audience, since it connects an interesting topic with the history of science and Sherlock Holmes. The article is doubly fitting given that this year I read Benjamin Blood’s 1874 reflections on philosophical reasoning under anesthesia.

On the building blocks of mathematical logic

Author: Moses Schönfinkel

Original Title: Über die Bausteine der mathematischen Logik

Publication: Mathematische Annalen

Published: 1924

Translated in From Frege to Gödel: A Source Book in Mathematical Logic, edited by Jean van Heijenoort and introduced by W. V. O. Quine

Link: Internet Archive

A typical exercise in a first course in mathematical logic is to prove that propositional logic can be expressed using only two of the usual operators, such as $\neg$ and $\lor$ (not and or). In 1913, Henry Sheffer proved that a single operator, now called NAND and given by $A \mid B = \neg A \lor \neg B$ for propositions $A, B$, is capable of expressing all of propositional logic.
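
Functional completeness of NAND is easy to check mechanically. A small Python sketch (the function names are my own) defines not, or, and and from NAND alone and compares them against Python’s built-in operators over the full truth table:

```python
from itertools import product

def nand(a, b):
    """Sheffer stroke: A | B = not A or not B."""
    return (not a) or (not b)

def not_(a):
    return nand(a, a)                    # A | A = not A

def or_(a, b):
    return nand(nand(a, a), nand(b, b))  # (not A) | (not B) = A or B

def and_(a, b):
    return nand(nand(a, b), nand(a, b))  # not (A | B) = A and B

def check_truth_tables():
    """Compare each NAND-only definition with Python's operators on all inputs."""
    for a, b in product([False, True], repeat=2):
        assert not_(a) == (not a)
        assert or_(a, b) == (a or b)
        assert and_(a, b) == (a and b)
    return True

print(check_truth_tables())  # True
```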

In this paper, Schönfinkel proves a similar but deeper result for first-order logic that reduces quantification, variables, and predicates to functions. In this setting, first-order logic can be expressed using three functions: a constant function $K$, a substitution function $S$, and a version of NAND $U$ for quantified predicates. Schönfinkel’s presentation is elegant, powerful, and philosophically reflective.

If the idea of building up formulas from functions sounds familiar, it is the basis for functional programming. In 1927, Haskell Curry discovered Schönfinkel’s paper and began developing combinatory logic, where these sorts of reducing functions are called combinators. Stephen Wolfram has written an interesting history of Schönfinkel centered around this paper. I discuss Schönfinkel’s paper in my survey of research from 1924.
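
The combinators $K$ and $S$ behave as $K x y = x$ and $S f g x = f x (g x)$. A Python sketch using curried lambdas (the encoding is my own, and Schönfinkel’s quantifier-related $U$ is omitted) shows that other functions, such as the identity and composition, can be built from $S$ and $K$ alone:

```python
# K x y = x: keep the first argument, discard the second
K = lambda x: lambda y: x
# S f g x = f x (g x): apply f to x and to (g applied to x)
S = lambda f: lambda g: lambda x: f(x)(g(x))

# Identity: S K K x = K x (K x) = x
I = S(K)(K)
# Composition: S (K S) K f g x = f (g x)
B = S(K(S))(K)

inc = lambda n: n + 1
double = lambda n: 2 * n
print(I(7))               # 7
print(B(inc)(double)(5))  # 11, i.e. inc(double(5))
```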

John Rawls and the death of Western Marxism

Author: Joseph Heath

Publication: In Due Course

Published: 2024

Link: Substack

In the midst of Cold War Rationality in the late 1970s and 1980s, Marxism saw a resurgence in the form of analytical Marxism. This approach offered a critique of capitalism largely grounded in the notion of exploitation with the rigor and toolset of analytic philosophy and microeconomic theory. The problem is that the approach didn’t pan out: results were unconvincing and internal coherence was lacking.

Heath offers an amusing history (and lament) of this Marxist wave. He argues that the competing Rawlsian program hastened its collapse. While A Theory of Justice (1971) may seem boring in contrast to the high theory of Marxism, it offered a critique of unfettered capitalism from a liberal direction.

What Rawls had provided, through his effort to “generalize and carry to a higher level of abstraction the familiar theory of the social contract,” was a natural way to derive the commitment to equality, as a normative principle governing the basic institutions of society. Rawlsianism therefore gave frustrated Marxists an opportunity to cut the Gordian knot, by providing them with a normative framework in which they could state directly their critique of capitalism, focusing on the parts that they found most objectionable, without requiring any entanglement in the complex apparatus of Marxist theory.

For more on Cold War Rationality, see S. M. Amadae’s Rationalizing Capitalist Democracy: The Cold War Origins of Rational Choice Liberalism (2003), which made my books list in 2021.

How the continuum hypothesis could have been a fundamental axiom

Author: Joel David Hamkins

Publication: Journal for the Philosophy of Mathematics

Published: 2024

Link: arXiv

The philosopher Penelope Maddy has argued that the axioms of set theory are in some sense historically contingent. Hamkins builds on this line of thought by considering a plausible alternative history of calculus in which the continuum hypothesis comes to be taken as an axiom. The continuum hypothesis (CH) states that there is no set whose cardinality (or size) is strictly between that of the integers and that of the real numbers.
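
For reference, the standard formal statement (my own rendering, not quoted from Hamkins):

$$\text{CH}:\quad \neg\exists X\,\bigl(|\mathbb{Z}| < |X| < |\mathbb{R}|\bigr), \qquad \text{equivalently, in ZFC,} \quad 2^{\aleph_0} = \aleph_1.$$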

CH is independent of the standard axioms of set theory (ZFC), meaning that it can be neither proven nor disproven from them. The consistency of ZFC with CH was proven by Kurt Gödel in 1938, and the consistency of ZFC with $\neg$CH was proven by Paul Cohen in 1963, who invented the technique of forcing along the way. While CH is formally independent of ZFC, some hold that it is nonetheless true or that we should reason about a multiverse of set theories.

Credit: Joel David Hamkins

The proposed alternate history begins with the development of infinitesimal calculus in the 17th century. In this telling, mathematicians develop a rigorous foundation for that calculus in terms of the hyperreal numbers. The hyperreals extend the real numbers with infinitesimals, nonzero numbers smaller in absolute value than every positive real number, and they fill “every conceivable gap in the numbers”. How does CH enter the picture? In ZFC alone, the hyperreals are underspecified; however, ZFC+CH yields a unique characterization of them. Hamkins argues that this development could have led to the acceptance of CH as a fundamental axiom of set theory.

A general worry about a paper that is simultaneously a high-level thought experiment and deep in the weeds of set theory is that subtly unsound reasoning may sneak in unnoticed. As someone familiar with these topics but not a set theorist, I needed some time to digest this paper and verify results that were mentioned only in passing.

Charles Darwin and Associates, Ghostbusters

Author: Richard Milner

Publication: Scientific American

Published: 1996

Link: JSTOR

In the late nineteenth century, spiritualism was having a cultural moment, partly as a reaction to rapid progress in the sciences. As part of this progress, evolutionary theory generated much controversy with its (largely) materialist account of life and change. This short article describes an episode in the history of science when evolutionary theory collided with spiritualism in “one of the strangest courtroom cases in Victorian England.”

In 1876, Ray Lankester, Thomas Huxley’s lab assistant and a junior member of Charles Darwin’s circle, sought to debunk a purported psychic. Partly, this was to win the elder Darwin’s favor, as Darwin’s brother-in-law, Hensleigh Wedgwood, had recently been swept up in the seance fervor. Lankester and a friend confronted the American psychic Henry Slade during a London event and later brought a complaint against him under “an old law intended to protect the public from traveling palm readers and sleight-of-hand artists.”

The trial was sensational. Lankester nearly botched the debunking by failing to identify Slade’s sleight of hand, to provide any substantial evidence, or even to give consistent testimony. In addition, the proceedings featured a stage magician who demonstrated how Slade could have deceived his audience, as well as testimony on Slade’s character from Alfred Russel Wallace, an avowed spiritualist who had also formulated natural selection independently of Darwin in the 1850s. The judgement went against Slade but would later be overturned.

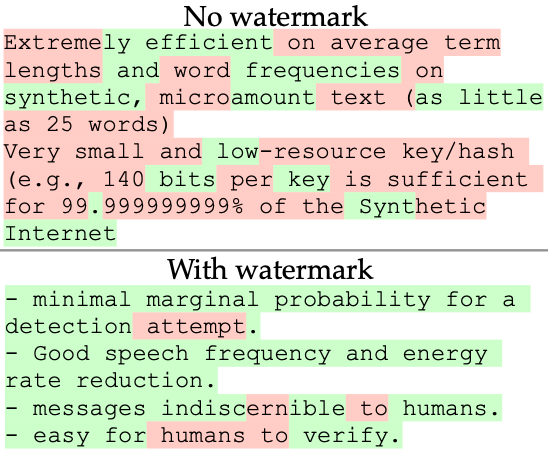

A Watermark for Large Language Models

Authors: John Kirchenbauer, Jonas Geiping, Yuxin Wen, Jonathan Katz, Ian Miers, and Tom Goldstein

Publication: ICML Proceedings

Published: 2023

Link: arXiv

The idea of watermarking a physical image or document to prevent forgery has been around for centuries. For generating watermarked images from a model, this looks something like slightly perturbing the image in a way that’s imperceptible to the human eye but detectable by the model owner. Doing something similar for language models poses a challenge because predicting the next word in a sentence offers dramatically less information to work with than all the pixels across channels in an image.

Credit: Kirchenbauer et al. (2023)

Over the past three years, several approaches to watermarking language models have been proposed. This paper introduces Green-Red Watermarking: at each step of generation, the model’s vocabulary is pseudorandomly divided into two sets, green and red, using a hash of the previous token as the seed. During inference, you slightly increase the probability of choosing a green token. The resulting text is detectable by the model owner, who can check whether the fraction of green tokens is higher than chance would predict. Other approaches offer different trade-offs between the security of the watermark and the effect on the model’s utility, including some that use ideas from differential privacy.
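
A toy Python sketch of the green-red idea, under heavy simplifying assumptions: the “language model” is uniform over a 1,000-token vocabulary, the green list is seeded by the previous token plus a made-up secret key rather than a cryptographic hash, and the constants `GAMMA` (green fraction) and `DELTA` (logit bonus) are illustrative values. Detection uses a one-proportion z-test on the number of green tokens, in the spirit of the paper’s test.

```python
import math
import random

VOCAB_SIZE = 1000  # toy vocabulary: tokens are integers 0..999
GAMMA = 0.25       # fraction of the vocabulary placed on the green list
DELTA = 2.0        # logit bonus added to green tokens during generation

def green_list(prev_token, key=42):
    """Pseudorandom green list seeded by the previous token and a (hypothetical) secret key."""
    rng = random.Random(key * 1_000_003 + prev_token)
    tokens = list(range(VOCAB_SIZE))
    rng.shuffle(tokens)
    return set(tokens[: int(GAMMA * VOCAB_SIZE)])

def sample_next(prev_token, rng, watermark=True):
    """Sample from a toy model with all-zero logits, boosting green tokens if watermarking."""
    green = green_list(prev_token) if watermark else set()
    weights = [math.exp(DELTA) if t in green else 1.0 for t in range(VOCAB_SIZE)]
    return rng.choices(range(VOCAB_SIZE), weights=weights, k=1)[0]

def generate(n_tokens, seed, watermark=True):
    rng = random.Random(seed)
    tokens = [0]  # arbitrary start token
    for _ in range(n_tokens):
        tokens.append(sample_next(tokens[-1], rng, watermark))
    return tokens

def z_score(tokens):
    """How many standard deviations the green count lies above its chance expectation."""
    T = len(tokens) - 1
    hits = sum(tok in green_list(prev) for prev, tok in zip(tokens, tokens[1:]))
    return (hits - GAMMA * T) / math.sqrt(T * GAMMA * (1 - GAMMA))

watermarked = generate(200, seed=1)
plain = generate(200, seed=1, watermark=False)
print(round(z_score(watermarked), 1), round(z_score(plain), 1))
```

Without the key, the green lists look random, so unwatermarked text should score near zero while watermarked text scores far above any reasonable detection threshold.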

On the reciprocal influence of the periodical publications, and the intellectual progress of this country

Author: William Stevenson

Publication: Blackwood’s Magazine

Published: 1824

Link: Google Books

One way to measure how the intellect of a country changes over time is to look at what’s published in its periodicals. By intellect, Stevenson means not so much scientific advancement as the overall quality of discourse. Looking back at the literature from the 1770s, Stevenson observed a dramatic rise in the quality of articles published in London periodicals over the fifty-year period. Is published writing merely a reflection of changing intellect, or does causation go both ways?

Credit: Johan Christian Dahl

Stevenson finds the literature from fifty years prior to be a bit pedestrian with respect to both topics and quality relative to the writing of his day. One driver of this is the notion of a moral convulsion. This is an event that causes radical social upheaval and a reevaluation of one’s moral landscape, which Stevenson compares to a volcano erupting and leaving fertile soil in its wake. During the time period in question, a moral convulsion was brought on by the French Revolution and the resulting turmoil.

How might periodicals influence the public intellect? Unlike books, periodicals often expose the reader to a variety of topics and the occasional gem of an article that might not have been sought out otherwise. In this way, ideas can diffuse through society. There are other avenues as well. As the number of published articles grew, outlets specialized to differentiate their collections and capture a specific audience. The resulting exclusivity increased both the prestige and utility of publishing one’s writing in this format, leading brighter minds to contribute to periodicals.

On this latter point, I couldn’t help but think that today we’ve seen some harmful effects emerge from this process of specialization insofar as the public intellect becomes fractured into echo chambers, cognitive islands, and epistemic bubbles. Regardless, this article lays out a strong case for why one should read periodicals, both then and now, and especially broader interest magazines such as The Atlantic and Harper’s.

The Deaths of Effective Altruism

Author: Leif Wenar

Publication: Wired

Published: 2024

Link: Click Here

This article chronicles the rise of effective altruism from the perspective of a philosopher who had similar aspirations in the 1990s. The cinematic synopsis:

The EA saga is not just a modern fable of corruption by money and fame, told in exaflops of computing power. This is a stranger story of how some small-time philosophers captured some big-bet billionaires, who in turn captured the philosophers—and how the two groups spun themselves into an opulent vortex that has sucked up thousands of bright minds worldwide.

Effective altruism began with the combination of Peter Singer’s shallow pond argument and quantified analyses of the effectiveness of charities in saving lives. Toby Ord is a philosopher who pushed in this direction in the 2000s. In the 2010s came William MacAskill, who was more pitchman than philosopher. An amusing passage:

Let me give a sense of how bad MacAskill’s philosophizing is. Words are the tools of the trade for philosophers, and we’re pretty obsessive about defining them. So you might think that the philosopher of effective altruism could tell us what “altruism” is. MacAskill says, “I want to be clear on what “altruism” means. As I use the term, altruism simply means improving the lives of others.” No competent philosopher could have written that sentence. Their flesh would have melted off and the bones dissolved before their fingers hit the keyboard.

Effective altruism mingled with other pseudointellectual online communities and exhibited similar poor reasoning:

Strong hyping of precise numbers based on weak evidence and lots of hedging and fudging. EAs appoint themselves experts on everything based on a sophomore’s understanding of rationality and the world. And the way they test their reasoning—debating other EAs via blog posts and chatboards—often makes it worse. Here, the basic laws of sociology kick in. With so little feedback from outside, the views that prevail in-group are typically the views that are stated the most confidently by the EA with higher status. EAs rediscovered groupthink.

By the late 2010s, effective altruism had adopted parts of longtermism. In some sense, longtermism is EA’s final form:

Longtermism lays bare that the EAs’ method is really a way to maximize on looking clever while minimizing on expertise and accountability.

Importantly, Wenar is not claiming that charity is ineffective. Rather, his point is that any intervention has both positive and negative effects. A better approach to charitable giving would be as transparent as possible about all known effects.

Poetry and Philosophy

Author: Arthur Cecil Pigou

Publication: The Contemporary Review

Published: 1924

Link: Google Books

There is an apparent tension between philosophical and poetic attitudes: philosophy prizes truth to the detriment of beauty, while poetry seeks to stir emotion without regard for reason. This article reflects on the degree to which the poetic leads one away from the truth.

Credit: the Online Library of Liberty

While poetry cannot do all of the heavy lifting of philosophy, Pigou finds a place for poetry in his view of the epistemic regress. A strong foundationalist (as was common at the time), Pigou holds that there are two sorts of beliefs or propositions: those inferentially justified and those immediately justified. The former are the stuff of philosophy, logic, mathematics, and the sciences: the “this” in “this follows from that”. The latter can be more ephemeral and are the stuff of metaphysics, foundations, methodology, and moral philosophy.

The poetic mode can provide data to support or frame which beliefs are justified immediately. Another place for poetry concerns how hypotheses or positions are discovered rather than their ultimate logical justification. Philosophers of science refer to these as the context of discovery and the context of justification.

Poetry and Philosophy is an excellent companion to C. P. Snow’s The Two Cultures (1959), since it provides a model of how the humanities and sciences can be complementary endeavors. I discuss Pigou’s paper in my survey of research from 1924.
