Interesting Articles I've Read in 2021

Below is a collection of interesting articles I’ve read in 2021. The dominant theme this year is differential privacy and learning theory (which is unsurprising given my current research). Four articles fall into this category: A Simple and Practical Algorithm for Differentially Private Data Release, Privacy in Pharmacogenetics, A dynamic logic for learning theory, and Exploring Connections Between Active Learning and Model Extraction. Two articles look at episodes in recent academic history: The Prehistory of biology preprints and Scientific community in a divided world. The Cult, the Cultic Milieu and Secularization is a foundational paper in the sociology of religion and esotericism. The remaining articles cover ancient Greek and Roman thinking about natural disasters, evidence for axioms in mathematics, and a new taxonomy of pseudoscience and pseudophilosophy.

The articles below are presented in chronological order of publication.

The Cult, the Cultic Milieu and Secularization

Author: Colin Campbell

Publication: A Sociological Yearbook of Religion in Britain

Published: 1972

Link: See below

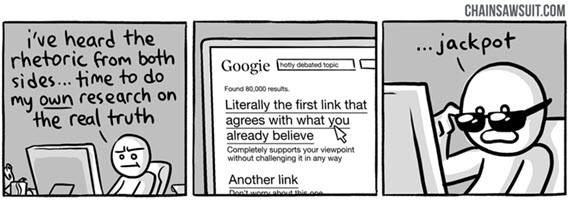

This paper investigates the cultic milieu while giving a sociological account of the cult. The cultic milieu is composed of “all deviant belief systems and their associated practices” in a society. While the cult is often best modeled as an authoritative institution or religious sect, the cultic milieu is united by the notion of seekership, with a hint of mysticism. The sentiment of seekership is encapsulated in the contemporary slogan “do your own research.”

The author speculates on the dynamics of the cultic milieu. Is the cultic milieu a necessary part of a society with dominant authoritative structures of science and religion? In closing, the author argues that the tolerance that (sometimes) accompanies secularization may allow the cultic milieu to flourish in ways it could not when religion was dominant. Whereas the church has a long history of stamping out heresies, scientists in general have no such obligation or established practice.

This is a difficult paper to track down. Two libraries I have access to had a print copy in special collections (presumably in a basement somewhere), but COVID made getting access too difficult. I eventually found it reprinted in The Cultic Milieu: Oppositional Substructures in an Age of Globalization, edited by Jeffrey Kaplan and Heléne Lööw.

Believing the Axioms I

Author: Penelope Maddy

Publication: The Journal of Symbolic Logic

Published: 1988

Link: Click Here

This article presents arguments for and against axioms grounding the foundations of mathematics from the perspective of the Cabal seminar. Importantly, candidate axioms and reasons for their acceptance are not clear cut; rather, many reasons rely on heuristics that capture intuitive ideas of sets or collections.

“The axiomatization of set theory has led to the consideration of axiom candidates that no one finds obvious, not even their staunchest supporters. In such cases, we find the methodology has more in common with the natural scientist’s hypothesis formation and testing than the caricature of the mathematician writing down a few obvious truths and proceeding to draw logical consequences.”

Maddy notes that ZFC is not in an epistemically privileged position even though many texts “derive” it from intuitions. What constitutes good evidence for an axiom? Here are some reasonable heuristics: the iterative conception (building sets from nothing), limiting the size of sets (to rule out unruly sets like the set of all sets), and one step back from disaster (being as permissive as possible).
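For reference, the iterative conception is standardly made precise by the cumulative hierarchy below (textbook material, not a formula taken from Maddy’s paper): sets are formed in stages starting from the empty set, and each successor stage adds every collection of sets formed so far.

```latex
V_0 = \varnothing, \qquad
V_{\alpha+1} = \mathcal{P}(V_\alpha), \qquad
V_\lambda = \bigcup_{\alpha < \lambda} V_\alpha \ \text{ for limit ordinals } \lambda, \qquad
V = \bigcup_{\alpha \in \mathrm{Ord}} V_\alpha
```

Each stage is a set, but the universe V collected at the end is a proper class, which is how this picture avoids a set of all sets.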

Our intuitions often concern the behavior of relatively small sets. In discussing evidence for and against the continuum hypothesis (and GCH) and large cardinal axioms, Maddy introduces an interesting heuristic, uniformity, which holds that what happens in one part of the iterative hierarchy (among small sets, perhaps) should happen throughout.

This paper continues in a more technical second part discussing determinacy axioms and additional deeper topics in set theory. See this article for a more recent similar discussion from Maddy.

Scientific community in a divided world: Economists, planning, and research priority during the cold war

Authors: Johanna Bockman and Michael A. Bernstein

Publication: Comparative Studies in Society and History

Published: 2008

Link: Click Here

This article paints a picture of mathematical economics research during the Cold War through the correspondence and friendship of Tjalling Koopmans and Leonid Kantorovich. Koopmans was a Dutch economist in the US associated with the Cowles Commission. Kantorovich was a Russian scientist with wide-ranging contributions to mathematics, engineering, and operations research. During WWII, both were involved in designing techniques for optimal resource allocation, i.e., early mathematical programming.

Following the war, this research was slowly declassified, and the West learned of Soviet work and vice versa. This is not to say that work from the other side was immediately accepted. For instance, Koopmans met much resistance when attempting to publish a translation of Kantorovich’s work, particularly over the claim that Kantorovich had priority for early results in linear programming.

In 1975, Koopmans and Kantorovich shared the Nobel Prize in economics for contributions to the theory of optimal allocation. This created much optimism among mathematical economists that they had crafted a toolset for studying economic processes in all types of economic systems:

“for economists, the seeming convergence of ideas in their disciplines, from East and West, appeared to be less the result of particular historical and political forces than the outcome of intellectual trends of longer gestation. As one Yale University economist put it shortly after Koopmans won the Nobel Prize, “The techniques of activity analysis [perfected by Kantorovich and Koopmans] exemplify the pure theory of decision-making, and, as such, are remarkably indifferent to economic institutions and organizational forms.” It was thus “one of the great achievements of this methodological revolution” that “economists of the East and West” could enjoy “continued dialogue— free of ideological overtones” (Scarf 1975: 712). Scientific rigor and objectivity had at long last triumphed over sectarian posturing; a new era of understanding, comity, and peace had potentially arrived.”

As the Cold War ended, economics shifted focus from “the economy” to analyses of “markets” and, with this, moved away from the perspective of operations research and economic planning. The authors argue that this Cold War dialogue and international cooperation has largely been forgotten and buried under “the end of history” style triumphalism.

A Simple and Practical Algorithm for Differentially Private Data Release

Authors: Moritz Hardt, Katrina Ligett, and Frank McSherry

Publication: NIPS (now NeurIPS)

Published: 2012

Link: Click Here

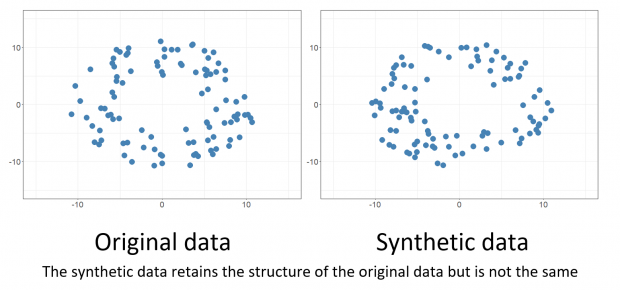

This paper introduces the MWEM (multiplicative weights exponential mechanism) algorithm for generating differentially private synthetic data that preserves the answers to a set of counting queries. One way to think about this algorithm is as analogous to Bayesian updating in which, at each step, the model is shown the most “surprising” evidence.

The algorithm begins with a uniform distribution over the data domain (represented as a histogram), then applies the exponential mechanism over the set of queries to privately select a query on which the current approximation performs worst. The chosen query is measured on the real data with Laplace noise added, and the approximation is updated using the multiplicative weights update rule. After a number of iterations, the output (the average of the per-round approximations) is a data representation that privately captures the information in the queries.
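A minimal sketch of the loop described above, assuming a small discrete domain stored as a histogram and counting queries given as 0/1 vectors. The function name `mwem`, the even split of the budget ε across the T rounds (ε/2T for selection and ε/2T for measurement), and the example data are my own illustrative choices, and none of the paper’s practical refinements are included:

```python
import math
import random


def mwem(hist, queries, epsilon, iterations, seed=None):
    """Minimal MWEM sketch for counting queries over a small discrete domain.

    hist:    true counts for each domain element (list of non-negative numbers)
    queries: list of 0/1 lists; q(h) = sum of h[x] over x with q[x] == 1
    Returns a synthetic histogram with the same total count n.
    """
    rng = random.Random(seed)
    n = sum(hist)
    d = len(hist)
    eps_round = epsilon / (2 * iterations)  # spent twice per round: select + measure

    def answer(h, q):
        return sum(hx * qx for hx, qx in zip(h, q))

    approx = [n / d] * d  # start from n times the uniform distribution
    iterates = []

    for _ in range(iterations):
        # Exponential mechanism: favour queries the approximation gets most wrong.
        scores = [abs(answer(approx, q) - answer(hist, q)) for q in queries]
        top = max(scores)  # subtract the max score for numerical stability
        weights = [math.exp(eps_round * (s - top) / 2) for s in scores]
        q = queries[rng.choices(range(len(queries)), weights=weights)[0]]

        # Laplace mechanism: the difference of two Exp(eps_round) draws
        # is Laplace noise with scale 1 / eps_round.
        noise = rng.expovariate(eps_round) - rng.expovariate(eps_round)
        measured = answer(hist, q) + noise

        # Multiplicative weights: shift mass toward agreeing with the measurement.
        err = measured - answer(approx, q)
        approx = [a * math.exp(qx * err / (2 * n)) for a, qx in zip(approx, q)]
        total = sum(approx)
        approx = [a * n / total for a in approx]
        iterates.append(approx)

    # Return the average of the per-round approximations.
    return [sum(col) / len(iterates) for col in zip(*iterates)]


singletons = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
synth = mwem([30, 0, 0, 10], singletons, epsilon=100.0, iterations=20, seed=0)
```

With a generous budget like this, the first coordinate of `synth` moves up from the uniform starting value of 10 toward the true count of 30. Smaller ε values give noisier selections and measurements.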

Ideas from this approach continue to be used and developed in state-of-the-art algorithms for differentially private synthetic data.

Privacy in Pharmacogenetics: An End-to-End Case Study of Personalized Warfarin Dosing

Authors: Matthew Fredrikson, Eric Lantz, Somesh Jha, Simon Lin, David Page, and Thomas Ristenpart

Publication: USENIX Security

Published: 2014

Link: Click Here

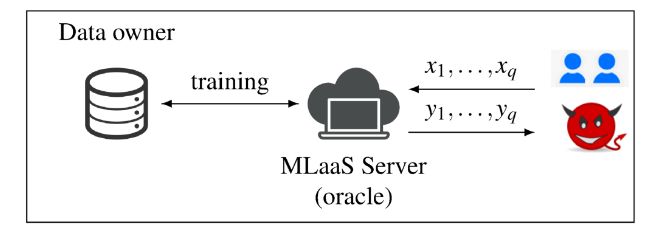

This paper introduces model inversion attacks, in which an adversary with access to a model (such as through an API) uses it to learn sensitive features of the input data. The authors study personalized medicine models that predict a patient’s optimal Warfarin dosage from demographic information and genetic markers. Since a patient’s demographic information is generally observable, as is their actual Warfarin dosage (from a prescription, for instance), the adversary can “invert” the model (pictured above) to predict which sensitive genetic markers are most likely given the other information.
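The inversion idea can be sketched as a simple maximum-a-posteriori guess: try every possible value of the sensitive attribute, score how well the model’s prediction explains the observed dose, and weight by how common that value is in the population. Everything concrete here is hypothetical and stands in for the real ingredients: the linear `toy_dose_model`, its coefficients, the three-valued genotype, the Gaussian error model, and the prior. This is not the paper’s dosing model.

```python
import math


def toy_dose_model(age_decades, genotype):
    # Hypothetical linear dosing model; NOT the paper's model or coefficients.
    # genotype is a hypothetical discrete marker taking values in {0, 1, 2}.
    return 5.0 - 0.3 * age_decades - 1.2 * genotype


def invert(model, known_features, observed_dose, candidates, prior, noise_sd=1.0):
    """Return the sensitive value that best explains the observed dose.

    Score = Gaussian log-likelihood of the observed dose given the model's
    prediction (an assumed error model) + log prior of the candidate value.
    """
    best, best_score = None, -math.inf
    for g in candidates:
        pred = model(*known_features, g)
        score = -((observed_dose - pred) ** 2) / (2 * noise_sd ** 2) + math.log(prior[g])
        if score > best_score:
            best, best_score = g, score
    return best


prior = {0: 0.5, 1: 0.3, 2: 0.2}  # hypothetical population frequencies
print(invert(toy_dose_model, (6,), 1.0, [0, 1, 2], prior))  # 2
```

In this example the prior favors genotype 1 over genotype 2. The observed dose is explained so much better by genotype 2 that the adversary still infers 2.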

If such models are widely available for use, then the privacy of patients is at risk. The authors consider using differentially private machine learning algorithms to build the dosing models. This approach has the benefit of masking the effect of uncommon observations on the model’s prediction; however, the authors found that the best methods at the time incurred too much inaccuracy in dose prediction to make the privacy gains worthwhile.

The Prehistory of biology preprints: a forgotten experiment from the 1960s

Author: Matthew Cobb

Publication: PLoS Biology

Published: 2017

Link: Click Here

In 1961, the NIH piloted a program called Information Exchange Groups (IEGs), in which individual academics would subscribe to an IEG matching their research area and could submit papers to, and receive papers from, other members of their group. After some initial hesitation on the part of established researchers, IEGs became rather popular in many areas of biology and medicine.

However, IEGs met severe pushback from many prestigious journals, which published editorials attacking preprint systems as essentially ruining science. After journals resolved not to publish papers associated with preprint systems, nearly all IEGs died out and the NIH pilot was shut down in 1967.

In 1999, the then-head of the NIH expressed interest in bringing preprints to biology again with a system similar to physics’ arXiv, which was started in 1991 (the same year as the World Wide Web). After repeated waves of pushback from journals and others, by 2013 PeerJ Preprints and bioRxiv (among others) had successfully launched, providing preprint systems for biology and bringing it in line with fields such as math and physics.

This paper is an excellent case study of both the difficulty of implementing open models of scientific communication and their benefits to researchers. Given the urgency of communicating results from COVID research, the NIH has continued to think about how to implement preprint systems. This article was included as reading this year in the Fermat’s Library Journal Club.

A dynamic logic for learning theory

Authors: Alexandru Baltag, Nina Gierasimczuk, Aybüke Özgün, Ana Lucia Vargas Sandoval, and Sonja Smets

Publication: Journal of Logical and Algebraic Methods in Programming

Published: 2019

Link: Click Here

This paper introduces a dynamic modal logic (called DLLT) to model formal learning theory. Formal learning theory (or more recently topological learning theory) studies the learnability/solvability of formal hypotheses/problems as information known with certainty accumulates.

DLLT is based on subset space logics, which come with a topological semantics that mirrors the topological structure underlying learning theory. Standard subset space logics capture learnability with certainty; by adding a learning (conjecture) operator, DLLT additionally captures learnability in the limit (inductive learnability) and convergence to correct conjectures, two foundational notions in learning theory.
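A standard toy example (not from the paper) separates the two notions. Suppose the hypotheses are “exactly n positive observations will ever occur” for n = 0, 1, 2, and so on. A learner that always conjectures the number of positives seen so far converges to the correct hypothesis on every data stream with finitely many positives. At no finite stage can it be certain, though, since another positive might still arrive. So this class is learnable in the limit but not with certainty. The function name `count_learner` is illustrative:

```python
from typing import Iterable, List


def count_learner(stream: Iterable[int]) -> List[int]:
    """Return the learner's conjecture after each observation.

    Hypotheses are 'exactly n positives (1s) ever occur'; the learner
    conjectures the number of positives observed so far.
    """
    conjectures = []
    seen = 0
    for obs in stream:
        seen += obs
        conjectures.append(seen)
    return conjectures


# A stream with 3 positives followed by (so far) only negatives.
print(count_learner([0, 1, 0, 1, 1, 0, 0, 0]))  # [0, 1, 1, 2, 3, 3, 3, 3]
```

The conjectures stabilize on 3, but every finite prefix is also consistent with 4, which is exactly the gap between inductive learnability and learnability with certainty.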

There have been several iterations of this paper at various venues since 2017. A full version (much more comprehensive than this one) was published in 2021.

Bullshit, Pseudoscience and Pseudophilosophy

Author: Victor Moberger

Publication: Theoria

Published: 2020

Link: Click Here

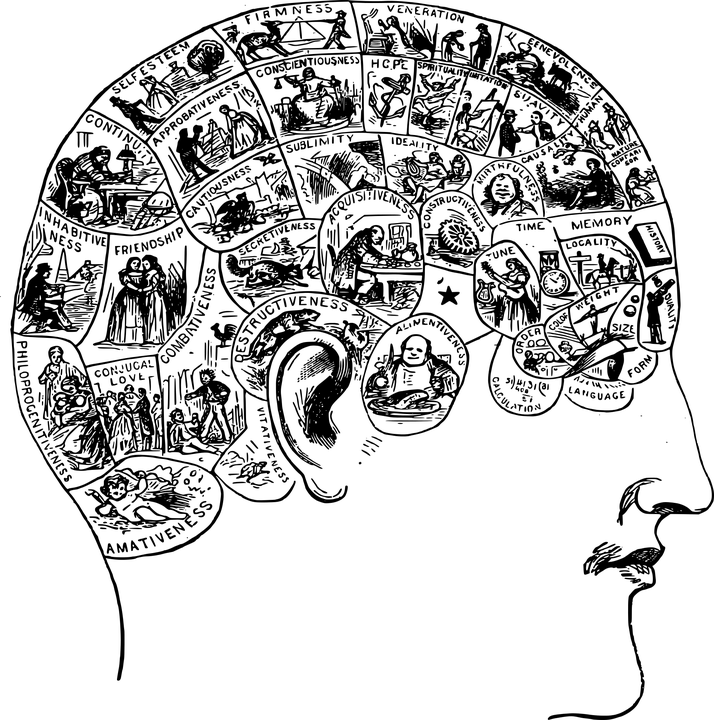

This article provides a taxonomy of pseudoscience and pseudophilosophy as varieties of bullshit. Though the paper is in the tradition of Harry Frankfurt’s On Bullshit, Moberger defines bullshit in terms of epistemic unconscientiousness rather than indifference to truth. Importantly, on this account, bullshit is contextual.

On this conception, pseudoscience and pseudophilosophy are bullshit with scientific and philosophical pretensions, respectively. The author describes two interesting varieties of pseudophilosophy: scientistic and obscurantist. The former is typified by popular science books such as The God Delusion and The Grand Design. The latter “poses as philosophy by using arcane and quasi‐technical terminology and jargon, which can easily make the most trivial claims appear profound.” As Moberger puts it, “scientistic pseudophilosophy tends not to pose as philosophy, however, since it often involves a hostile attitude toward the subject.”

One difficulty for Moberger’s account, inherent in making the definition of bullshit contextual, is that even a practitioner of obscurantist pseudophilosophy may believe that they are reasoning soundly. One can imagine this pejorative being unjustly applied to new approaches and notation, as well as to ideas imported from other disciplines. Are strange new approaches that eventually supersede old ones pseudoscience or pseudophilosophy until they become accepted as legitimate?

A short-form version of this article was published in Psyche.

Exploring Connections Between Active Learning and Model Extraction

Authors: Varun Chandrasekaran, Kamalika Chaudhuri, Irene Giacomelli, Somesh Jha, and Songbai Yan

Publication: USENIX Security Symposium

Published: 2020

Link: Click Here

Model extraction is a privacy attack on machine learning models in which an adversary with query access attempts to reconstruct the model. This paper introduces a formal threat model for model extraction and establishes a correspondence between model extraction and query synthesis (QS) active learning. Importantly, this kind of active learning is distribution-agnostic: the learner synthesizes its own query points rather than drawing them from a distribution over the data. This contrasts with the PAC setting, where examples are drawn from a fixed (typically unknown) distribution and error is measured with respect to that distribution.

This correspondence lets us say that a model extraction attack with a given number of queries, error, confidence, and hypothesis class exists just in case an active learner with corresponding query complexity, error, confidence, and hypothesis class exists. An adversary can therefore import existing active learning techniques as model extraction attacks. The authors consider several such attacks for a variety of hypothesis classes (linear, non-linear, tree-based, and so forth).
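A toy illustration of the correspondence (not one of the paper’s attacks): extracting a one-dimensional threshold classifier is the textbook query synthesis active learning problem, and binary search solves it. The oracle and the function name `extract_threshold` are hypothetical. The oracle is assumed to return 1 exactly when x ≥ t for some unknown t in (lo, hi].

```python
def extract_threshold(oracle, lo=0.0, hi=1.0, tol=1e-3):
    """Recover the threshold of a black-box 1-D classifier by binary search.

    Assumes oracle(x) is truthy iff x >= t for an unknown t in (lo, hi].
    Returns (estimate, number_of_queries); the estimate is within tol / 2 of t.
    """
    queries = 0
    while hi - lo > tol:
        mid = (lo + hi) / 2  # synthesize the most informative query point
        queries += 1
        if oracle(mid):
            hi = mid  # t <= mid
        else:
            lo = mid  # t > mid
    return (lo + hi) / 2, queries


estimate, n_queries = extract_threshold(lambda x: x >= 0.637)
print(n_queries)  # 10
```

The adversary needs only about log2(1/tol) queries because it chooses every query point itself. A passive learner relying on randomly sampled labeled points would typically need on the order of 1/tol of them to reach the same precision.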

Poseidon’s wrath: How a vanished ancient Greek city helps us think about disasters

Author: Guy D Middleton

Publication: Aeon

Published: 2021

Link: Click Here

This article discusses how the Greeks and Romans thought about natural disasters through the case of Helike, one of the Achaean city-states, which was destroyed by a natural disaster. Accounts of its demise from Aristotle (more likely pseudo-Aristotle) and others hold that the sea swept Helike away following an earthquake. This account seemed reasonable since both earthquakes and tsunamis fell under the purview of Poseidon.

“The ancient world was not so different from today in that its people experienced and sought to understand natural disasters brought on by earthquakes and tsunamis. They did so in ways that were influenced by their milieu – by their traditions and education, and by their conversation and curiosity. These natural disasters were part of the story of the developments of religion, cultural norms and social behaviour, and also of the development of what we see as rational or scientific thinking.”

In the 1950s, archaeologists began searching for Helike without much luck. Only in the 1980s and 1990s did more evidence emerge, when artifacts that appear to be from the ancient city were found well inland. This led to a new hypothesis in which severe earthquakes broke natural dams and the resulting inland flood washed the city away; however, the reconstruction of the events is still an open question.
